Runtime Management: Your Execution Environment ⚙️

In PyRun, the Runtime is the specific environment where your Python code executes during a job run on your AWS account. It dictates the Python version, available packages, and potentially system-level tools. PyRun simplifies runtime creation and management, letting you focus on dependencies while it handles the build process.

What is a Runtime?

A PyRun runtime bundles everything your code needs in order to execute:

- Python Interpreter: The specific version of Python used (e.g., Python 3.9, 3.10).

- Python Packages: The libraries and modules available to your code (e.g., pandas, NumPy, scikit-learn, Lithops, Dask).

- System-Level Dependencies: (Optional) Underlying operating system libraries and tools that your code might require.

- Base Operating System: The operating system on which all the other components run.

PyRun provides pre-built runtimes for common scenarios, but you can customize your runtime to meet your specific project needs.
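To see what a given runtime actually contains, a short script can report the components listed above. The following is a minimal sketch using only the Python standard library. The function name `describe_runtime` and the package names it queries are illustrative choices, not part of PyRun.

```python
import platform
from importlib import metadata


def describe_runtime(packages):
    """Return a dict describing the interpreter, OS, and selected packages."""
    versions = {}
    for name in packages:
        try:
            versions[name] = metadata.version(name)
        except metadata.PackageNotFoundError:
            versions[name] = None  # Not installed in this runtime
    return {
        "python": platform.python_version(),
        "os": platform.platform(),
        "packages": versions,
    }


if __name__ == "__main__":
    # Example package names; list the distributions your job depends on.
    info = describe_runtime(["pandas", "numpy", "lithops"])
    print(f"Python {info['python']} on {info['os']}")
    for name, version in info["packages"].items():
        print(f"  {name}: {version or 'not installed'}")
```

Running this as a job (rather than only in the workspace terminal) shows the environment your jobs really execute in.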

How to Customize Your Runtime

You define your desired runtime environment within the .pyrun/ directory of your workspace. PyRun automatically detects changes here and rebuilds the runtime. There are two methods:

1. Using environment.yml (Recommended)

This is the easiest and most common way, suitable for most Python package needs. Create or edit the .pyrun/environment.yml file to define a Conda environment.

Structure:

    name: pyrun-environment  # Can be any name
    channels:
      - conda-forge  # Specify conda channels
      - defaults
    dependencies:
      - python=3.10  # Specify Python version
      # Conda packages:
      - pandas>=1.3
      - numpy
      # Pip packages:
      - pip:
          - requests
          - scikit-learn==1.0.*
          - $LITHOPS  # Essential if using Lithops features in PyRun!
          # - any-other-pip-package

- Specify Python Version: Use python=X.Y.

- Add Conda Packages: List under dependencies.

- Add Pip Packages: List under the pip: section.

- Use $LITHOPS: If using Lithops, you must include $LITHOPS in the pip section. PyRun substitutes this with the correct, integrated Lithops version during the build. This is added automatically in Lithops templates.
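The idea of a build-time placeholder can be illustrated with Python's `string.Template`. This is only a sketch of the concept: how PyRun actually performs the substitution is not described here, and the function name `resolve_placeholders` and the value `lithops==0.0.0` are made up for the example.

```python
from string import Template


def resolve_placeholders(environment_text, lithops_requirement):
    """Replace $LITHOPS in an environment.yml string with a pip requirement."""
    # safe_substitute leaves any other $NAME tokens untouched instead of raising.
    return Template(environment_text).safe_substitute(LITHOPS=lithops_requirement)


EXAMPLE = """\
dependencies:
  - python=3.10
  - pip:
      - requests
      - $LITHOPS
"""

if __name__ == "__main__":
    # "lithops==0.0.0" is a hypothetical value used only to show the substitution.
    print(resolve_placeholders(EXAMPLE, "lithops==0.0.0"))
```

The practical takeaway is that `$LITHOPS` should be written literally in the file and not replaced by hand with a version of your own.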

2. Using Dockerfile (Advanced)

For full control, including installing system-level dependencies (e.g., via apt-get) or using a specific base OS image, replace .pyrun/environment.yml with a .pyrun/Dockerfile.

Example Dockerfile:

    # Start from a base Python image
    FROM public.ecr.aws/docker/library/python:3.10-slim-buster

    # Install system dependencies (add any other needed system packages to the list)
    RUN apt-get update && apt-get install -y --no-install-recommends \
            gcc \
            git \
        && rm -rf /var/lib/apt/lists/*

    # Set up the function directory (standard for some cloud function environments)
    ARG FUNCTION_DIR="/function"
    RUN mkdir -p ${FUNCTION_DIR}
    WORKDIR ${FUNCTION_DIR}

    # Copy requirements (optional, can install directly)
    # COPY requirements.txt .

    # Install Python packages using pip
    # Ensure LITHOPS integration variable is available if needed
    ARG LITHOPS
    # Add other pip packages to the list below; the last package line must not
    # end with a backslash, or the next instruction is swallowed into this RUN
    RUN pip install --no-cache-dir \
            pandas \
            numpy \
            requests \
            "$LITHOPS"
    # If using requirements.txt: RUN pip install --no-cache-dir -r requirements.txt

    # Ensure Python output is not buffered
    ENV PYTHONUNBUFFERED=TRUE

    # (Optional) Define entrypoint or command if needed
    # CMD ["python", "your_script.py"]

- PyRun detects the Dockerfile and uses Docker to build the image.

- Make sure to handle Python package installation within the Dockerfile (e.g., using pip install).

- If using Lithops, ensure the $LITHOPS build argument is used correctly during pip install.
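If you want to check that a Dockerfile builds before handing it to PyRun, one option is to build it locally and supply the LITHOPS build argument yourself. The sketch below only assembles the `docker build` command line. The helper name `docker_build_command`, the image tag, and the stand-in value `lithops` are assumptions for illustration; the value PyRun passes during its own cloud build is not documented here and may differ.

```python
import shlex


def docker_build_command(lithops_requirement, tag="my-runtime:test",
                         dockerfile=".pyrun/Dockerfile", context="."):
    """Build the argv list for a local `docker build` of the runtime image."""
    return [
        "docker", "build",
        "--file", dockerfile,
        # Supplies the value for the `ARG LITHOPS` line in the Dockerfile.
        "--build-arg", f"LITHOPS={lithops_requirement}",
        "--tag", tag,
        context,
    ]


if __name__ == "__main__":
    cmd = docker_build_command("lithops")  # stand-in value, see note above
    print(shlex.join(cmd))
    # To actually run the build (requires Docker installed locally):
    # import subprocess; subprocess.run(cmd, check=True)
```

A local build that succeeds does not guarantee the cloud build will, but it catches syntax errors and missing system packages early.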

The Automatic Build Process

PyRun makes runtime updates seamless:

- Save Changes: Modify and save your .pyrun/environment.yml or .pyrun/Dockerfile.

- Detection: PyRun detects the file change.

- Rebuild Prompt: You'll be prompted to confirm that you want to rebuild your runtime.

- Cloud Build: PyRun initiates the build process in the cloud:

  - YML: Creates a Conda environment and installs packages.

  - Dockerfile: Builds the Docker image.

- Real-time Logs: The "Logs" tab in your workspace switches to show the live output of the build process. You can monitor package installation or Docker build steps. Errors during the build will be shown here.

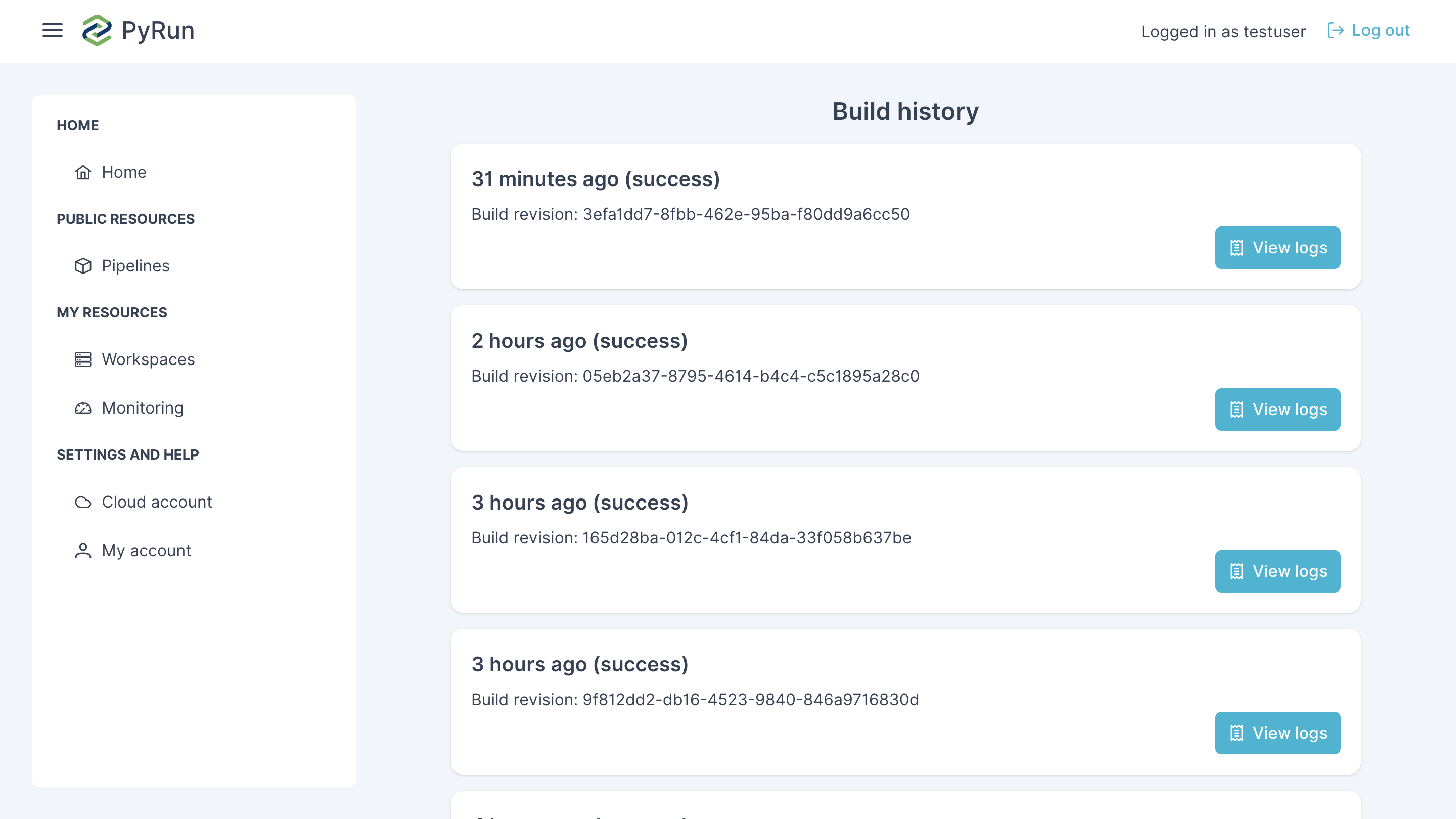

- Build History: Past build logs are often accessible within the workspace or dashboard for review.

- Automatic Reload: Once the build completes successfully, your workspace may automatically reload or indicate the new runtime is ready.

- Execution: Your job then executes using the newly built, custom runtime.
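The detection step above can be pictured as comparing a fingerprint of the runtime definition files against the one recorded at the last build. The sketch below shows one common way to do that with SHA-256. It is not PyRun's actual mechanism, which is not described here, and the names `runtime_fingerprint` and `needs_rebuild` are hypothetical.

```python
import hashlib
import tempfile
from pathlib import Path

RUNTIME_FILES = ("environment.yml", "Dockerfile")


def runtime_fingerprint(pyrun_dir):
    """Hash the runtime definition files found in the given .pyrun directory."""
    digest = hashlib.sha256()
    for name in RUNTIME_FILES:
        path = Path(pyrun_dir) / name
        if path.is_file():
            digest.update(name.encode())     # which file is present matters too
            digest.update(path.read_bytes())
    return digest.hexdigest()


def needs_rebuild(pyrun_dir, last_built_fingerprint):
    """Return True when the definition files differ from the last build."""
    return runtime_fingerprint(pyrun_dir) != last_built_fingerprint


if __name__ == "__main__":
    with tempfile.TemporaryDirectory() as workspace:
        env_file = Path(workspace) / "environment.yml"
        env_file.write_text("dependencies:\n  - python=3.10\n")
        built = runtime_fingerprint(workspace)
        print(needs_rebuild(workspace, built))  # nothing changed since the build
        env_file.write_text("dependencies:\n  - python=3.11\n")
        print(needs_rebuild(workspace, built))  # file was edited
```

Because the fingerprint depends only on file contents, saving a file without changing it would not trigger a rebuild under this scheme.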

Important Considerations

- Persistence: Packages must be defined in environment.yml or Dockerfile to be included in the persistent runtime used for job execution.

- Workspace Terminal: Using pip install or conda install in the workspace terminal only affects that interactive session. These packages will not be available when you click the "Run" button for a job.

- Build Times: Complex environments or many packages can increase runtime build times. Keep your environment focused on necessary dependencies.

Best Practices

- Start with environment.yml: It's simpler for most Python package management.

- Use Dockerfile for System Needs: Switch to a Dockerfile if you need apt-get installs or specific base images.

- Pin Versions: Specify package versions in your YML or requirements.txt (for Dockerfile) for reproducible builds. An exact pin such as pandas==1.5.3 is the most reproducible; a lower bound such as requests>=2.20 is looser but still guards against outdated releases.

- Keep it Lean: Only include packages essential for your code to minimize build times and potential conflicts.

- Test Your Runtime: After a rebuild, run a simple script that imports your key dependencies to ensure the environment is correct.
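A smoke test of this kind can be very small. The following sketch tries to import each module and reports any failure. The helper name `check_imports` and the module list are examples to replace with your own dependencies.

```python
import importlib


def check_imports(module_names):
    """Try to import each module; map each name to None or an error message."""
    results = {}
    for name in module_names:
        try:
            importlib.import_module(name)
            results[name] = None
        except Exception as exc:  # ImportError, or errors raised at import time
            results[name] = f"{type(exc).__name__}: {exc}"
    return results


if __name__ == "__main__":
    # Replace with the import names of your own key dependencies.
    results = check_imports(["pandas", "numpy", "requests"])
    for name, error in results.items():
        print(f"{name}: {'OK' if error is None else error}")
```

Note that the import name can differ from the package name used in environment.yml; for example, scikit-learn is imported as `sklearn`.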

By understanding and utilizing PyRun's runtime management, you can ensure your code always runs with the exact dependencies it needs, deployed automatically and efficiently.